Even though Hawtio is a pretty awesome console for JBoss Fuse, sometimes you want, or even need, access to the underlying data Hawtio uses. Maybe you want to incorporate it into your existing dashboard, maybe you want to store it in a database so you have a historical record, or maybe you’re just curious and want to experiment with it.

The nice thing about JBoss Fuse, and really about the underlying community products (mainly Apache Camel and Apache ActiveMQ), is that they expose a lot of metrics and statistics via JMX. Now you could start your favorite IDE or text editor and write a slab of Java code to query the JMX metrics from Apache Camel and Apache ActiveMQ, like some sort of maniac. Or you could leverage the Jolokia support that ships out of the box, like the Hawtio people did and as any sane person would.

For those of you who have never heard of Jolokia: in a nutshell, it's basically JMX over HTTP/JSON, which makes doing JMX almost fun again.

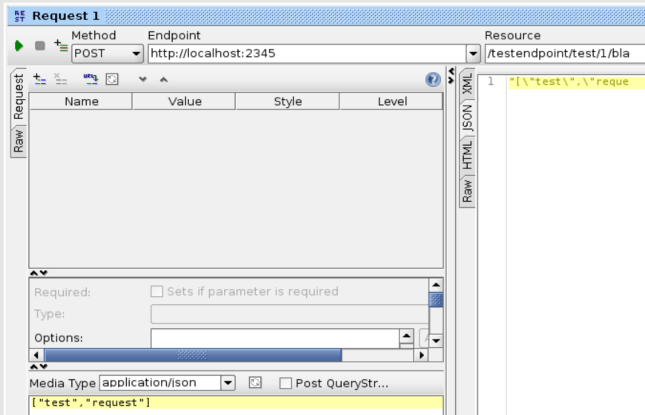

To get you started, here are a couple of Jolokia requests (and their responses). These are just plain HTTP GET requests; do note that they use basic authentication to authenticate against the JBoss Fuse server.
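If you want to script these requests instead of pasting them into a browser, a minimal sketch using only the Python standard library could look like the following. The base URL is the one used throughout this post; the `admin`/`admin` credentials and the helper names are assumptions, so substitute a user that exists on your JBoss Fuse installation.

```python
import base64
import json
import urllib.request
from typing import Optional

# Assumption: Hawtio's Jolokia agent as used throughout this post.
BASE_URL = "http://localhost:8181/hawtio/jolokia"


def read_url(mbean: str, attribute: Optional[str] = None) -> str:
    """Build a Jolokia GET 'read' URL: <base>/read/<mbean>[/<attribute>]."""
    url = f"{BASE_URL}/read/{mbean}"
    if attribute:
        url += "/" + attribute
    return url


def basic_auth_header(user: str, password: str) -> str:
    """Value for the HTTP Authorization header (basic authentication)."""
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    return "Basic " + token


def jolokia_read(mbean: str, attribute: Optional[str] = None,
                 user: str = "admin", password: str = "admin") -> dict:
    """Perform the GET request and return the parsed JSON response.

    The admin/admin defaults are placeholders, not Fuse defaults.
    """
    request = urllib.request.Request(read_url(mbean, attribute))
    request.add_header("Authorization", basic_auth_header(user, password))
    with urllib.request.urlopen(request, timeout=10) as response:
        body = json.load(response)
    # The JSON body carries its own "status"; treat anything but 200 as an error.
    if body.get("status") != 200:
        raise RuntimeError(body.get("error", "Jolokia request failed"))
    return body
```

For example, `jolokia_read("java.lang:type=Memory", "HeapMemoryUsage")["value"]` should give you the `value` object of the first response below. The MBean name is put into the URL path as-is, which works for the names used in this post; names containing special characters such as a literal slash need Jolokia's own escaping rules.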

First, some statistics on the JVM.

The request for querying the heap memory usage of the JVM that JBoss Fuse is running on:

http://localhost:8181/hawtio/jolokia/read/java.lang:type=Memory/HeapMemoryUsage

On my local test machine, this returns the following response:

```json
{
  "request":{
    "mbean":"java.lang:type=Memory",
    "attribute":"HeapMemoryUsage",
    "type":"read"
  },
  "value":{
    "init":536870912,
    "committed":749207552,
    "max":954728448,
    "used":118298056
  },
  "timestamp":1469035168,
  "status":200
}
```
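Every field in a `MemoryUsage` value is a byte count, which is not very readable on a dashboard. A small, hypothetical helper that converts the numbers to MiB and expresses `used` as a percentage of `max` might look like this. It also guards against `max` being `-1`, which the JVM reports when no maximum is defined (as in the non-heap response below).

```python
from typing import Optional

MIB = 1024 * 1024


def summarize_memory(value: dict) -> dict:
    """Condense a MemoryUsage value (init/committed/max/used, all in bytes)."""
    max_bytes = value["max"]
    # The JVM reports max = -1 when no maximum is defined.
    pct_of_max: Optional[float] = None
    if max_bytes > 0:
        pct_of_max = round(100.0 * value["used"] / max_bytes, 1)
    return {
        "used_mib": round(value["used"] / MIB, 1),
        "committed_mib": round(value["committed"] / MIB, 1),
        "pct_of_max": pct_of_max,
    }


# The "value" object from the heap response above.
heap = {"init": 536870912, "committed": 749207552, "max": 954728448, "used": 118298056}
print(summarize_memory(heap))
```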

Now that we know how to query the heap memory, fetching the non-heap memory usage is pretty trivial:

http://localhost:8181/hawtio/jolokia/read/java.lang:type=Memory/NonHeapMemoryUsage

```json
{
  "request":{
    "mbean":"java.lang:type=Memory",
    "attribute":"NonHeapMemoryUsage",
    "type":"read"
  },
  "value":{
    "init":2555904,
    "committed":120848384,
    "max":-1,
    "used":110152704
  },
  "timestamp":1469035298,
  "status":200
}
```
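Instead of issuing one GET per attribute, Jolokia also accepts bulk requests: a POST whose JSON body is an array of request objects, answered with an array of responses in the same order. The sketch below fetches heap and non-heap usage in a single round trip. The endpoint, the `admin`/`admin` credentials and the function names are assumptions, and it presumes your Jolokia/Hawtio configuration allows POST requests.

```python
import base64
import json
import urllib.request

# Assumed endpoint: bulk requests are POSTed to the agent URL itself.
JOLOKIA_URL = "http://localhost:8181/hawtio/jolokia"


def memory_bulk_payload() -> list:
    """One read request per attribute; Jolokia answers them in this order."""
    return [
        {"type": "read", "mbean": "java.lang:type=Memory", "attribute": attribute}
        for attribute in ("HeapMemoryUsage", "NonHeapMemoryUsage")
    ]


def bulk_read(payload: list, user: str = "admin", password: str = "admin") -> list:
    """POST the JSON array and return the array of responses (placeholder credentials)."""
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    request = urllib.request.Request(
        JOLOKIA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json", "Authorization": "Basic " + token},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=10) as response:
        return json.load(response)


def values_by_attribute(responses: list) -> dict:
    """Map each successful response's requested attribute to its value."""
    return {
        r["request"]["attribute"]: r["value"]
        for r in responses
        if r.get("status") == 200
    }
```

Usage would be `values_by_attribute(bulk_read(memory_bulk_payload()))`; the same payload shape works for mixing JVM, ActiveMQ and Camel reads in one request.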

Some additional threading statistics for the JVM:

http://localhost:8181/hawtio/jolokia/read/java.lang:type=Threading

which returns:

```json
{
  "request":{
    "mbean":"java.lang:type=Threading",
    "type":"read"
  },
  "value":{
    "ThreadAllocatedMemorySupported":true,
    "ThreadContentionMonitoringEnabled":false,
    "TotalStartedThreadCount":125,
    "CurrentThreadCpuTimeSupported":true,
    "CurrentThreadUserTime":610000000,
    "PeakThreadCount":108,
    "AllThreadIds":[
      150, 45, 123, 122, 121, 120, 119, 118, 117, 116, 115, 113, 111, 110, 109, 107,
      106, 105, 104, 103, 102, 101, 100, 99, 98, 97, 96, 95, 94, 93, 92, 90,
      87, 86, 84, 83, 82, 81, 80, 79, 78, 77, 76, 74, 73, 72, 71, 70,
      69, 68, 67, 65, 62, 61, 60, 58, 57, 55, 54, 53, 51, 47, 46, 44,
      42, 40, 39, 38, 37, 36, 35, 34, 33, 32, 31, 30, 28, 27, 26, 24,
      25, 21, 20, 23, 22, 19, 18, 15, 14, 13, 12, 11, 4, 3, 2, 1
    ],
    "ThreadAllocatedMemoryEnabled":true,
    "CurrentThreadCpuTime":624340113,
    "ObjectName":{
      "objectName":"java.lang:type=Threading"
    },
    "ThreadContentionMonitoringSupported":true,
    "ThreadCpuTimeSupported":true,
    "ThreadCount":96,
    "ThreadCpuTimeEnabled":true,
    "ObjectMonitorUsageSupported":true,
    "SynchronizerUsageSupported":true,
    "DaemonThreadCount":70
  },
  "timestamp":1469035389,
  "status":200
}
```
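The full Threading read includes the complete `AllThreadIds` array, which you rarely need for monitoring. Jolokia accepts a comma-separated list of attributes in the URL path (the Camel query further down uses the same trick), in which case `value` becomes an object keyed by attribute name. A sketch that builds such a URL and derives a few numbers from it; the attribute selection and helper names are just one possible, hypothetical choice.

```python
# Hypothetical selection of Threading attributes worth tracking.
WANTED = ["ThreadCount", "PeakThreadCount", "DaemonThreadCount", "TotalStartedThreadCount"]


def threading_url(base_url: str, attributes: list) -> str:
    """Jolokia GET read URL with a comma-separated attribute list."""
    return f"{base_url}/read/java.lang:type=Threading/{','.join(attributes)}"


def thread_summary(value: dict) -> dict:
    """Derive a few numbers from the (attribute -> value) object."""
    return {
        "live": value["ThreadCount"],
        "peak": value["PeakThreadCount"],
        # Live threads that are not daemon threads.
        "non_daemon": value["ThreadCount"] - value["DaemonThreadCount"],
        "started_since_jvm_start": value["TotalStartedThreadCount"],
    }


print(threading_url("http://localhost:8181/hawtio/jolokia", WANTED))
```

With the numbers from the response above, `thread_summary` reports 96 live threads, of which 26 are non-daemon threads.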

Now for some JBoss Fuse-specific queries.

First, some overall statistics for our ActiveMQ broker:

http://localhost:8181/hawtio/jolokia/read/org.apache.activemq:type=Broker,brokerName=amq

Which returns:

```json
{
  "request":{
    "mbean":"org.apache.activemq:brokerName=amq,type=Broker",
    "type":"read"
  },
  "value":{
    "StatisticsEnabled":true,
    "TotalConnectionsCount":0,
    "StompSslURL":"",
    "TransportConnectors":{
      "openwire":"tcp:\/\/localhost:61616",
      "stomp":"stomp+nio:\/\/pim-XPS-15-9530:61613"
    },
    "StompURL":"",
    "TotalProducerCount":0,
    "CurrentConnectionsCount":0,
    "TopicProducers":[],
    "JMSJobScheduler":null,
    "UptimeMillis":461929,
    "TemporaryQueueProducers":[],
    "TotalDequeueCount":0,
    "JobSchedulerStorePercentUsage":0,
    "DurableTopicSubscribers":[],
    "QueueSubscribers":[],
    "AverageMessageSize":1024,
    "BrokerVersion":"5.11.0.redhat-621084",
    "TemporaryQueues":[],
    "BrokerName":"amq",
    "MinMessageSize":1024,
    "DynamicDestinationProducers":[],
    "OpenWireURL":"tcp:\/\/localhost:61616",
    "TemporaryTopics":[],
    "JobSchedulerStoreLimit":0,
    "TotalConsumerCount":0,
    "MaxMessageSize":1024,
    "TotalMessageCount":0,
    "TempPercentUsage":0,
    "TemporaryQueueSubscribers":[],
    "MemoryPercentUsage":0,
    "SslURL":"",
    "InactiveDurableTopicSubscribers":[],
    "StoreLimit":10737418240,
    "QueueProducers":[],
    "VMURL":"vm:\/\/amq",
    "TemporaryTopicProducers":[],
    "Topics":[
      {
        "objectName":"org.apache.activemq:brokerName=amq,destinationName=ActiveMQ.Advisory.MasterBroker,destinationType=Topic,type=Broker"
      }
    ],
    "Uptime":"7 minutes",
    "BrokerId":"ID:pim-XPS-15-9530-35535-1469035012806-0:1",
    "DataDirectory":"\/home\/pim\/apps\/jboss-fuse-6.2.1.redhat-084\/data\/amq",
    "Persistent":true,
    "TopicSubscribers":[],
    "MemoryLimit":668309914,
    "Slave":false,
    "TotalEnqueueCount":1,
    "TempLimit":5368709120,
    "TemporaryTopicSubscribers":[],
    "StorePercentUsage":0,
    "Queues":[]
  },
  "timestamp":1469035474,
  "status":200
}
```
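To turn the broker read into something a dashboard or an alerting job can act on, you could pick out a handful of fields and compare the `*PercentUsage` gauges against a threshold. The 80% threshold and the function name below are assumptions for illustration, not ActiveMQ defaults.

```python
# Assumed alerting threshold in percent; not an ActiveMQ default.
THRESHOLD = 80
USAGE_GAUGES = ("MemoryPercentUsage", "StorePercentUsage", "TempPercentUsage")


def broker_overview(value: dict, threshold: int = THRESHOLD) -> dict:
    """Pick a few headline numbers from the Broker MBean value."""
    # Gauges at or above the threshold, e.g. {"StorePercentUsage": 91}.
    alerts = {g: value[g] for g in USAGE_GAUGES if value[g] >= threshold}
    return {
        "broker": value["BrokerName"],
        "version": value["BrokerVersion"],
        "uptime_seconds": value["UptimeMillis"] // 1000,
        "connections": value["CurrentConnectionsCount"],
        "enqueued": value["TotalEnqueueCount"],
        "dequeued": value["TotalDequeueCount"],
        "alerts": alerts,
    }
```

Feeding it the `value` object above yields an uptime of 461 seconds and no alerts, since all usage gauges are still at 0.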

We can also zoom into a specific JMS destination on our broker, in this case the Queue named testQueue:

http://localhost:8181/hawtio/jolokia/read/org.apache.activemq:type=Broker,brokerName=amq,destinationType=Queue,destinationName=testQueue

```json
{
  "request":{
    "mbean":"org.apache.activemq:brokerName=amq,destinationName=testQueue,destinationType=Queue,type=Broker",
    "type":"read"
  },
  "value":{
    "ProducerFlowControl":true,
    "AlwaysRetroactive":false,
    "Options":"",
    "MemoryUsageByteCount":0,
    "AverageBlockedTime":0.0,
    "MemoryPercentUsage":0,
    "CursorMemoryUsage":0,
    "InFlightCount":0,
    "Subscriptions":[],
    "CacheEnabled":true,
    "ForwardCount":0,
    "DLQ":false,
    "AverageEnqueueTime":0.0,
    "Name":"testQueue",
    "MaxAuditDepth":10000,
    "BlockedSends":0,
    "TotalBlockedTime":0,
    "MaxPageSize":200,
    "QueueSize":0,
    "PrioritizedMessages":false,
    "MemoryUsagePortion":0.0,
    "Paused":false,
    "EnqueueCount":0,
    "MessageGroups":{},
    "ConsumerCount":0,
    "AverageMessageSize":0,
    "CursorFull":false,
    "MaxProducersToAudit":64,
    "ExpiredCount":0,
    "CursorPercentUsage":0,
    "MinEnqueueTime":0,
    "MinMessageSize":0,
    "MemoryLimit":1048576,
    "DispatchCount":0,
    "MaxEnqueueTime":0,
    "BlockedProducerWarningInterval":30000,
    "DequeueCount":0,
    "ProducerCount":0,
    "MessageGroupType":"cached",
    "MaxMessageSize":0,
    "UseCache":true,
    "SlowConsumerStrategy":null
  },
  "timestamp":1469035582,
  "status":200
}
```
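A similar check works per destination. This sketch flags a few conditions worth a closer look, using only attributes that appear in the queue response above; which conditions count as a problem is an assumption you should tune for your own setup.

```python
def queue_issues(value: dict) -> list:
    """List conditions on a Queue MBean value that may deserve attention (hypothetical rules)."""
    issues = []
    # A backlog with nobody reading it usually means a consumer is down.
    if value["QueueSize"] > 0 and value["ConsumerCount"] == 0:
        issues.append("messages are waiting but no consumer is attached")
    if value["Paused"]:
        issues.append("queue is paused")
    if value["BlockedSends"] > 0:
        issues.append("sends have been blocked by producer flow control")
    if value["ExpiredCount"] > 0:
        issues.append("messages have expired")
    return issues
```

For the idle testQueue above, every counter is 0 and `queue_issues` returns an empty list.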

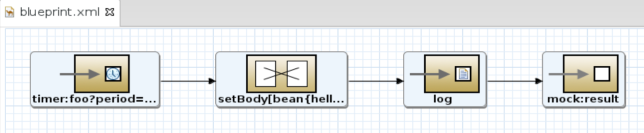

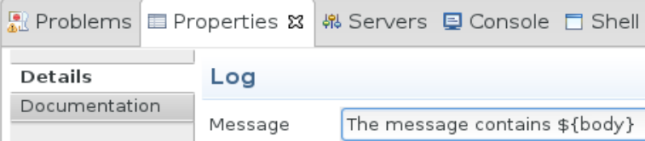

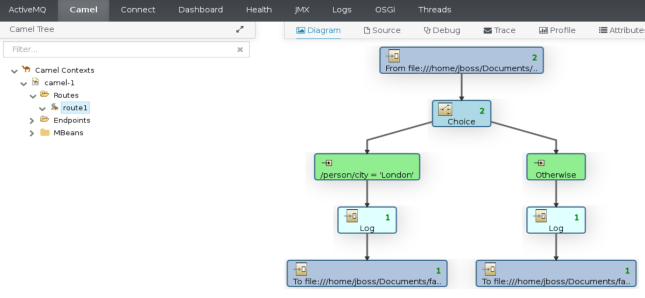

Besides ActiveMQ, we can also retrieve some Camel statistics:

http://localhost:8181/hawtio/jolokia/read/org.apache.camel:context=*,type=routes,name=*/Load01,InflightExchanges,LastProcessingTime,ExchangesFailed

This returns the metrics “Load01”, “InflightExchanges”, “LastProcessingTime” and “ExchangesFailed” for all Camel routes in all Camel contexts, thanks to the wildcards (context=* and name=*) in the MBean name:

```json
{
  "request":{
    "mbean":"org.apache.camel:context=*,name=*,type=routes",
    "attribute":[
      "Load01",
      "InflightExchanges",
      "LastProcessingTime",
      "ExchangesFailed"
    ],
    "type":"read"
  },
  "value":{
    "org.apache.camel:context=camel-blueprint,name=\"route1\",type=routes":{
      "LastProcessingTime":0,
      "ExchangesFailed":0,
      "InflightExchanges":0,
      "Load01":""
    },
    "org.apache.camel:context=camel-blueprint,name=\"route3\",type=routes":{
      "LastProcessingTime":0,
      "ExchangesFailed":0,
      "InflightExchanges":0,
      "Load01":""
    },
    "org.apache.camel:context=camel-blueprint,name=\"route2\",type=routes":{
      "LastProcessingTime":0,
      "ExchangesFailed":0,
      "InflightExchanges":0,
      "Load01":""
    }
  },
  "timestamp":1469035650,
  "status":200
}
```
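Because the MBean name contains wildcards, `value` is keyed by the full ObjectName of every matching route. This sketch splits those keys back into their properties so you can tabulate per-route numbers. The parser is deliberately naive and assumes no commas or escaped quotes inside quoted values, which holds for the route names in this example; treating an empty `Load01` string as "no data yet" is likewise an assumption.

```python
from typing import Optional


def object_name_props(object_name: str) -> dict:
    """Split 'domain:k1=v1,k2="v2"' into key properties.

    Naive: assumes no commas or escaped quotes inside quoted values.
    """
    _, _, props = object_name.partition(":")
    result = {}
    for pair in props.split(","):
        key, _, val = pair.partition("=")
        result[key] = val.strip('"')
    return result


def load_or_none(load: str) -> Optional[float]:
    """Load01 arrives as a string; an empty string becomes None (assumption)."""
    return float(load) if load else None


def route_rows(value: dict) -> list:
    """(context, route, failed, inflight, load01) per route, sorted by ObjectName."""
    rows = []
    for object_name, attrs in sorted(value.items()):
        props = object_name_props(object_name)
        rows.append((props["context"], props["name"], attrs["ExchangesFailed"],
                     attrs["InflightExchanges"], load_or_none(attrs["Load01"])))
    return rows
```

Applied to the response above, this yields one row each for route1, route2 and route3 in context camel-blueprint, all with zero failed and in-flight exchanges.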

We can of course also drop the attribute list to get all available statistics on the Camel routes:

http://localhost:8181/hawtio/jolokia/read/org.apache.camel:context=*,type=routes,name=*

which returns a lot more statistics:

```json
{
  "request":{
    "mbean":"org.apache.camel:context=*,name=*,type=routes",
    "type":"read"
  },
  "value":{
    "org.apache.camel:context=camel-blueprint,name=\"route1\",type=routes":{
      "StatisticsEnabled":true,
      "EndpointUri":"rest:\/\/get:\/user:\/%7Bid%7D?description=Find+user+by+id&outType=nl.schiphol.my.camel.swagger.example.User&routeId=route1",
      "CamelManagementName":"camel-blueprint",
      "ExchangesCompleted":0,
      "LastProcessingTime":0,
      "ExchangesFailed":0,
      "Description":null,
      "FirstExchangeCompletedExchangeId":null,
      "FirstExchangeCompletedTimestamp":null,
      "LastExchangeFailureTimestamp":null,
      "MaxProcessingTime":0,
      "LastExchangeCompletedTimestamp":null,
      "Load15":"",
      "DeltaProcessingTime":0,
      "OldestInflightDuration":null,
      "ExternalRedeliveries":0,
      "ExchangesTotal":0,
      "ResetTimestamp":"2016-07-20T19:16:53+02:00",
      "ExchangesInflight":0,
      "MeanProcessingTime":0,
      "LastExchangeFailureExchangeId":null,
      "FirstExchangeFailureExchangeId":null,
      "CamelId":"myCamel",
      "TotalProcessingTime":0,
      "FirstExchangeFailureTimestamp":null,
      "RouteId":"route1",
      "RoutePolicyList":"",
      "FailuresHandled":0,
      "MessageHistory":true,
      "Load05":"",
      "OldestInflightExchangeId":null,
      "State":"Started",
      "InflightExchanges":0,
      "Redeliveries":0,
      "MinProcessingTime":0,
      "LastExchangeCompletedExchangeId":null,
      "Tracing":false,
      "Load01":""
    },
    "org.apache.camel:context=camel-blueprint,name=\"route3\",type=routes":{
      "StatisticsEnabled":true,
      "EndpointUri":"rest:\/\/get:\/user:\/findAll?description=Find+all+users&outType=nl.schiphol.my.camel.swagger.example.User%5B%5D&routeId=route3",
      "CamelManagementName":"camel-blueprint",
      "ExchangesCompleted":0,
      "LastProcessingTime":0,
      "ExchangesFailed":0,
      "Description":null,
      "FirstExchangeCompletedExchangeId":null,
      "FirstExchangeCompletedTimestamp":null,
      "LastExchangeFailureTimestamp":null,
      "MaxProcessingTime":0,
      "LastExchangeCompletedTimestamp":null,
      "Load15":"",
      "DeltaProcessingTime":0,
      "OldestInflightDuration":null,
      "ExternalRedeliveries":0,
      "ExchangesTotal":0,
      "ResetTimestamp":"2016-07-20T19:16:53+02:00",
      "ExchangesInflight":0,
      "MeanProcessingTime":0,
      "LastExchangeFailureExchangeId":null,
      "FirstExchangeFailureExchangeId":null,
      "CamelId":"myCamel",
      "TotalProcessingTime":0,
      "FirstExchangeFailureTimestamp":null,
      "RouteId":"route3",
      "RoutePolicyList":"",
      "FailuresHandled":0,
      "MessageHistory":true,
      "Load05":"",
      "OldestInflightExchangeId":null,
      "State":"Started",
      "InflightExchanges":0,
      "Redeliveries":0,
      "MinProcessingTime":0,
      "LastExchangeCompletedExchangeId":null,
      "Tracing":false,
      "Load01":""
    },
    "org.apache.camel:context=camel-blueprint,name=\"route2\",type=routes":{
      "StatisticsEnabled":true,
      "EndpointUri":"rest:\/\/put:\/user?description=Updates+or+create+a+user&inType=nl.schiphol.my.camel.swagger.example.User&routeId=route2",
      "CamelManagementName":"camel-blueprint",
      "ExchangesCompleted":0,
      "LastProcessingTime":0,
      "ExchangesFailed":0,
      "Description":null,
      "FirstExchangeCompletedExchangeId":null,
      "FirstExchangeCompletedTimestamp":null,
      "LastExchangeFailureTimestamp":null,
      "MaxProcessingTime":0,
      "LastExchangeCompletedTimestamp":null,
      "Load15":"",
      "DeltaProcessingTime":0,
      "OldestInflightDuration":null,
      "ExternalRedeliveries":0,
      "ExchangesTotal":0,
      "ResetTimestamp":"2016-07-20T19:16:53+02:00",
      "ExchangesInflight":0,
      "MeanProcessingTime":0,
      "LastExchangeFailureExchangeId":null,
      "FirstExchangeFailureExchangeId":null,
      "CamelId":"myCamel",
      "TotalProcessingTime":0,
      "FirstExchangeFailureTimestamp":null,
      "RouteId":"route2",
      "RoutePolicyList":"",
      "FailuresHandled":0,
      "MessageHistory":true,
      "Load05":"",
      "OldestInflightExchangeId":null,
      "State":"Started",
      "InflightExchanges":0,
      "Redeliveries":0,
      "MinProcessingTime":0,
      "LastExchangeCompletedExchangeId":null,
      "Tracing":false,
      "Load01":""
    }
  },
  "timestamp":1469035760,
  "status":200
}
```
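Coming back to the idea of keeping a historical record: a minimal sketch that appends one row per route from a response like the one above into SQLite, using the response `timestamp` (seconds since the epoch) as the sample time. The table layout, the column selection and the function name are hypothetical; any database would do.

```python
import sqlite3


def store_route_stats(conn: sqlite3.Connection, response: dict) -> int:
    """Append one row per route from a Jolokia read response; returns rows written."""
    conn.execute(
        """CREATE TABLE IF NOT EXISTS route_stats (
               ts INTEGER,              -- Jolokia response timestamp (epoch seconds)
               camel_id TEXT,
               route_id TEXT,
               state TEXT,
               exchanges_total INTEGER,
               exchanges_failed INTEGER,
               mean_processing_time INTEGER)"""
    )
    # One tuple per matching route MBean in the wildcard response.
    rows = [
        (response["timestamp"], a["CamelId"], a["RouteId"], a["State"],
         a["ExchangesTotal"], a["ExchangesFailed"], a["MeanProcessingTime"])
        for a in response["value"].values()
    ]
    conn.executemany("INSERT INTO route_stats VALUES (?, ?, ?, ?, ?, ?, ?)", rows)
    conn.commit()
    return len(rows)
```

Calling this on a schedule gives you a simple per-route time series; for the response above it would write three rows (route1, route2 and route3) stamped 1469035760.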

There are probably tens or hundreds more queries to think of, but hopefully this gets you started in the right direction.