Recently I spent some time experimenting with the Fabric8 microservices framework. It is far too comprehensive to cover in a single blog post, so here I want to focus on deploying an app/microservice to a Fabric8 cluster.

For this post I am using a local fabric8 install running on minikube. For more information see: http://fabric8.io/guide/getStarted/gofabric8.html

For details on how to set up your fabric8 environment using minikube, you can read the excellent blog post by my colleague Dirk Janssen.

Also, since I have recently been doing quite a lot of work with Apache Camel using the CDI framework, the decision to deploy a Camel CDI based microservice was quickly made 🙂

So in this blog I will outline how to create a basic Camel/CDI based microservice using Maven and deploy it on a Kubernetes cluster using a fabric8 CD pipeline.

Generating the microservice

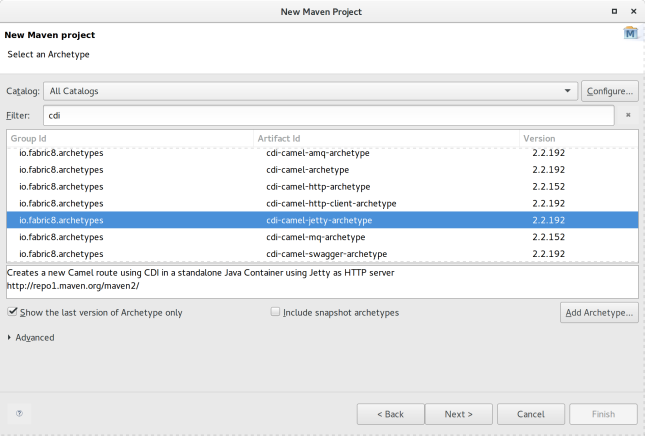

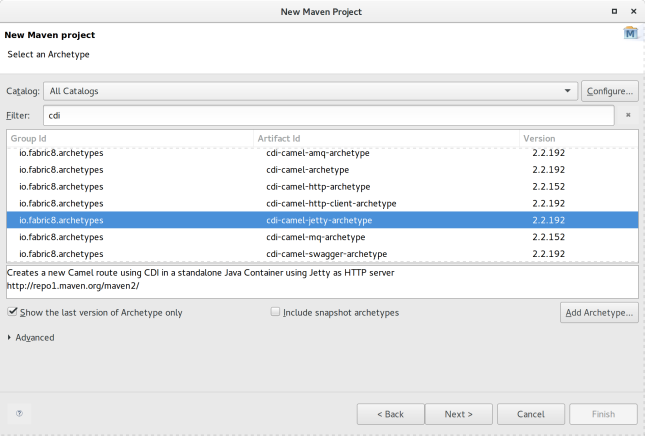

I used a Maven archetype to quickly bootstrap the microservice. I used the Eclipse IDE to generate the project, but you can of course use the Maven CLI as well.

Fabric8 provides lots of different archetypes and quickstarts out of the box for various programming languages and, especially for Java, various frameworks. Here I am going with the cdi-camel-jetty-archetype. This archetype generates a Camel route exposed through a Jetty endpoint, wired together using CDI.
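For those who prefer the Maven CLI over Eclipse, generating the project could look roughly like the sketch below. It is not the exact command I ran. The archetype groupId io.fabric8.archetypes is an assumption you should check against the fabric8 archetype catalog, and the archetype version is deliberately left as an argument because I am not going to guess it. The project coordinates (nl.rubix.eos, cdi-jetty-demo, 0.0.1-SNAPSHOT) are the ones that show up in the build logs further down. The function name generate_project is just a hypothetical helper.

```shell
# Assumption: the fabric8 archetypes are published under this groupId.
ARCHETYPE_GROUP_ID="io.fabric8.archetypes"
ARCHETYPE_ARTIFACT_ID="cdi-camel-jetty-archetype"

# Generate the project non-interactively.
# Usage: generate_project <archetype-version>
generate_project() {
  mvn archetype:generate \
    -DinteractiveMode=false \
    -DarchetypeGroupId="$ARCHETYPE_GROUP_ID" \
    -DarchetypeArtifactId="$ARCHETYPE_ARTIFACT_ID" \
    -DarchetypeVersion="$1" \
    -DgroupId=nl.rubix.eos \
    -DartifactId=cdi-jetty-demo \
    -Dversion=0.0.1-SNAPSHOT
}
```

Call it with the archetype version that matches your fabric8 install; the result should be a cdi-jetty-demo directory containing the generated pom.xml and route class.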

Building and running locally

As with any Maven based application one of the first things to do after the project is created is executing:

$ mvn clean install

However initially the result was:

~/workspace/cdi-jetty-demo$ mvn install

[INFO] Scanning for projects...

[INFO]

[INFO] ------------------------------------------------------------------------

[INFO] Building Fabric8 :: Quickstarts :: CDI :: Camel with Jetty as HTTP server 0.0.1-SNAPSHOT

[INFO] ------------------------------------------------------------------------

[INFO]

[INFO] --- maven-resources-plugin:2.6:resources (default-resources) @ cdi-jetty-demo ---

[INFO] Using 'UTF-8' encoding to copy filtered resources.

[INFO] Copying 2 resources

[INFO]

[INFO] --- fabric8-maven-plugin:3.2.8:resource (default) @ cdi-jetty-demo ---

[INFO] F8: Running in Kubernetes mode

[INFO] F8: Using resource templates from /home/pim/workspace/cdi-jetty-demo/src/main/fabric8

2016-12-28 11:54:14 INFO Version:30 - HV000001: Hibernate Validator 5.2.4.Final

[WARNING] F8: fmp-git: No .git/config file could be found so cannot annotate kubernetes resources with git commit SHA and branch

[WARNING] F8: fmp-git: No .git/config file could be found so cannot annotate kubernetes resources with git commit SHA and branch

[WARNING] F8: fmp-git: No .git/config file could be found so cannot annotate kubernetes resources with git commit SHA and branch

[WARNING] F8: fmp-git: No .git/config file could be found so cannot annotate kubernetes resources with git commit SHA and branch

[WARNING] F8: fmp-git: No .git/config file could be found so cannot annotate kubernetes resources with git commit SHA and branch

[WARNING] F8: fmp-git: No .git/config file could be found so cannot annotate kubernetes resources with git commit SHA and branch

[INFO] ------------------------------------------------------------------------

[INFO] BUILD FAILURE

[INFO] ------------------------------------------------------------------------

[INFO] Total time: 3.612 s

[INFO] Finished at: 2016-12-28T11:54:14+01:00

[INFO] Final Memory: 37M/533M

[INFO] ------------------------------------------------------------------------

[ERROR] Failed to execute goal io.fabric8:fabric8-maven-plugin:3.2.8:resource (default) on project cdi-jetty-demo: Execution default of goal io.fabric8:fabric8-maven-plugin:3.2.8:resource failed: Container cdi-jetty-demo has no Docker image configured. Please check your Docker image configuration (including the generators which are supposed to run) -> [Help 1]

[ERROR]

[ERROR] To see the full stack trace of the errors, re-run Maven with the -e switch.

[ERROR] Re-run Maven using the -X switch to enable full debug logging.

[ERROR]

[ERROR] For more information about the errors and possible solutions, please read the following articles:

[ERROR] [Help 1] http://cwiki.apache.org/confluence/display/MAVEN/PluginExecutionException

Since the archetype did not have a template in cdi-jetty-demo/src/main/fabric8 and the plugin was still looking for one there, the build threw an error. After some looking around I managed to solve the issue by using a different version of the fabric8-maven-plugin.

Specifically:

<fabric8.maven.plugin.version>3.1.49</fabric8.maven.plugin.version>

With this version the clean install executed successfully.

~/workspace/cdi-jetty-demo$ mvn clean install

[INFO] Scanning for projects...

[INFO]

[INFO] ------------------------------------------------------------------------

[INFO] Building Fabric8 :: Quickstarts :: CDI :: Camel with Jetty as HTTP server 0.0.1-SNAPSHOT

[INFO] ------------------------------------------------------------------------

[INFO]

[INFO] --- maven-clean-plugin:2.5:clean (default-clean) @ cdi-jetty-demo ---

[INFO] Deleting /home/pim/workspace/cdi-jetty-demo/target

[INFO]

[INFO] --- maven-resources-plugin:2.6:resources (default-resources) @ cdi-jetty-demo ---

[INFO] Using 'UTF-8' encoding to copy filtered resources.

[INFO] Copying 2 resources

[INFO]

[INFO] --- fabric8-maven-plugin:3.1.49:resource (default) @ cdi-jetty-demo ---

[INFO] F8> Running in Kubernetes mode

[INFO] F8> Running generator java-exec

[INFO] F8> Using resource templates from /home/pim/workspace/cdi-jetty-demo/src/main/fabric8

[WARNING] F8> No .git/config file could be found so cannot annotate kubernetes resources with git commit SHA and branch

[WARNING] F8> No .git/config file could be found so cannot annotate kubernetes resources with git commit SHA and branch

[INFO]

[INFO] --- maven-compiler-plugin:3.3:compile (default-compile) @ cdi-jetty-demo ---

[INFO] Changes detected - recompiling the module!

[INFO] Compiling 1 source file to /home/pim/workspace/cdi-jetty-demo/target/classes

[INFO]

[INFO] --- maven-resources-plugin:2.6:testResources (default-testResources) @ cdi-jetty-demo ---

[INFO] Using 'UTF-8' encoding to copy filtered resources.

[INFO] Copying 1 resource

[INFO]

[INFO] --- maven-compiler-plugin:3.3:testCompile (default-testCompile) @ cdi-jetty-demo ---

[INFO] Changes detected - recompiling the module!

[INFO] Compiling 1 source file to /home/pim/workspace/cdi-jetty-demo/target/test-classes

[INFO]

[INFO] --- maven-surefire-plugin:2.18.1:test (default-test) @ cdi-jetty-demo ---

[INFO]

[INFO] --- maven-jar-plugin:2.4:jar (default-jar) @ cdi-jetty-demo ---

[INFO] Building jar: /home/pim/workspace/cdi-jetty-demo/target/cdi-jetty-demo.jar

[INFO]

[INFO] >>> fabric8-maven-plugin:3.1.49:build (default) > initialize @ cdi-jetty-demo >>>

[INFO]

[INFO] <<< fabric8-maven-plugin:3.1.49:build (default) < initialize @ cdi-jetty-demo <<<

[INFO]

[INFO] --- fabric8-maven-plugin:3.1.49:build (default) @ cdi-jetty-demo ---

[INFO] F8> Running in Kubernetes mode

[INFO] F8> Running generator java-exec

[INFO] F8> Environment variable from gofabric8 : DOCKER_HOST=tcp://192.168.42.40:2376

[INFO] F8> Environment variable from gofabric8 : DOCKER_CERT_PATH=/home/pim/.minikube/certs

[INFO] F8> Pulling from fabric8/java-alpine-openjdk8-jdk

117f30b7ae3d: Already exists

f1011be98339: Pull complete

dae2abad9134: Pull complete

d1ea5cd75444: Pull complete

cec1e2f1c0f2: Pull complete

a9ec98a3bcba: Pull complete

38bbb9125eaa: Pull complete

66f50c07037b: Pull complete

868f8ddb8412: Pull complete

[INFO] F8> Digest: sha256:572ec2fdc9ac33bb1a8a5ee96c17eae9b7797666ed038c08b5c3583f98c1277f

[INFO] F8> Status: Downloaded newer image for fabric8/java-alpine-openjdk8-jdk:1.1.11

[INFO] F8> Pulled fabric8/java-alpine-openjdk8-jdk:1.1.11 in 47 seconds

Downloading: https://repo.fusesource.com/nexus/content/groups/public/org/apache/httpcomponents/httpclient/4.3.3/httpclient-4.3.3.pom

Downloading: https://maven.repository.redhat.com/ga/org/apache/httpcomponents/httpclient/4.3.3/httpclient-4.3.3.pom

Downloading: https://repo.maven.apache.org/maven2/org/apache/httpcomponents/httpclient/4.3.3/httpclient-4.3.3.pom

Downloaded: https://repo.maven.apache.org/maven2/org/apache/httpcomponents/httpclient/4.3.3/httpclient-4.3.3.pom (6 KB at 30.9 KB/sec)

Downloading: https://repo.fusesource.com/nexus/content/groups/public/org/apache/httpcomponents/httpmime/4.3.3/httpmime-4.3.3.pom

Downloading: https://maven.repository.redhat.com/ga/org/apache/httpcomponents/httpmime/4.3.3/httpmime-4.3.3.pom

Downloading: https://repo.maven.apache.org/maven2/org/apache/httpcomponents/httpmime/4.3.3/httpmime-4.3.3.pom

Downloaded: https://repo.maven.apache.org/maven2/org/apache/httpcomponents/httpmime/4.3.3/httpmime-4.3.3.pom (5 KB at 81.7 KB/sec)

Downloading: https://repo.fusesource.com/nexus/content/groups/public/org/apache/httpcomponents/httpclient-cache/4.3.3/httpclient-cache-4.3.3.pom

Downloading: https://maven.repository.redhat.com/ga/org/apache/httpcomponents/httpclient-cache/4.3.3/httpclient-cache-4.3.3.pom

Downloading: https://repo.maven.apache.org/maven2/org/apache/httpcomponents/httpclient-cache/4.3.3/httpclient-cache-4.3.3.pom

Downloaded: https://repo.maven.apache.org/maven2/org/apache/httpcomponents/httpclient-cache/4.3.3/httpclient-cache-4.3.3.pom (7 KB at 102.3 KB/sec)

Downloading: https://repo.fusesource.com/nexus/content/groups/public/org/apache/httpcomponents/fluent-hc/4.3.3/fluent-hc-4.3.3.pom

Downloading: https://maven.repository.redhat.com/ga/org/apache/httpcomponents/fluent-hc/4.3.3/fluent-hc-4.3.3.pom

Downloading: https://repo.maven.apache.org/maven2/org/apache/httpcomponents/fluent-hc/4.3.3/fluent-hc-4.3.3.pom

Downloaded: https://repo.maven.apache.org/maven2/org/apache/httpcomponents/fluent-hc/4.3.3/fluent-hc-4.3.3.pom (5 KB at 80.4 KB/sec)

[INFO] Copying files to /home/pim/workspace/cdi-jetty-demo/target/docker/eos/cdi-jetty-demo/snapshot-161228-115614-0167/build/maven

[INFO] Building tar: /home/pim/workspace/cdi-jetty-demo/target/docker/eos/cdi-jetty-demo/snapshot-161228-115614-0167/tmp/docker-build.tar

[INFO] F8> docker-build.tar: Created [eos/cdi-jetty-demo:snapshot-161228-115614-0167] "java-exec" in 8 seconds

[INFO] F8> [eos/cdi-jetty-demo:snapshot-161228-115614-0167] "java-exec": Built image sha256:94fad

[INFO] F8> [eos/cdi-jetty-demo:snapshot-161228-115614-0167] "java-exec": Tag with latest

[INFO]

[INFO] --- maven-install-plugin:2.4:install (default-install) @ cdi-jetty-demo ---

[INFO] Installing /home/pim/workspace/cdi-jetty-demo/target/cdi-jetty-demo.jar to /home/pim/.m2/repository/nl/rubix/eos/cdi-jetty-demo/0.0.1-SNAPSHOT/cdi-jetty-demo-0.0.1-SNAPSHOT.jar

[INFO] Installing /home/pim/workspace/cdi-jetty-demo/pom.xml to /home/pim/.m2/repository/nl/rubix/eos/cdi-jetty-demo/0.0.1-SNAPSHOT/cdi-jetty-demo-0.0.1-SNAPSHOT.pom

[INFO] Installing /home/pim/workspace/cdi-jetty-demo/target/classes/META-INF/fabric8/openshift.yml to /home/pim/.m2/repository/nl/rubix/eos/cdi-jetty-demo/0.0.1-SNAPSHOT/cdi-jetty-demo-0.0.1-SNAPSHOT-openshift.yml

[INFO] Installing /home/pim/workspace/cdi-jetty-demo/target/classes/META-INF/fabric8/openshift.json to /home/pim/.m2/repository/nl/rubix/eos/cdi-jetty-demo/0.0.1-SNAPSHOT/cdi-jetty-demo-0.0.1-SNAPSHOT-openshift.json

[INFO] Installing /home/pim/workspace/cdi-jetty-demo/target/classes/META-INF/fabric8/kubernetes.yml to /home/pim/.m2/repository/nl/rubix/eos/cdi-jetty-demo/0.0.1-SNAPSHOT/cdi-jetty-demo-0.0.1-SNAPSHOT-kubernetes.yml

[INFO] Installing /home/pim/workspace/cdi-jetty-demo/target/classes/META-INF/fabric8/kubernetes.json to /home/pim/.m2/repository/nl/rubix/eos/cdi-jetty-demo/0.0.1-SNAPSHOT/cdi-jetty-demo-0.0.1-SNAPSHOT-kubernetes.json

[INFO] ------------------------------------------------------------------------

[INFO] BUILD SUCCESS

[INFO] ------------------------------------------------------------------------

[INFO] Total time: 01:03 min

[INFO] Finished at: 2016-12-28T11:57:15+01:00

[INFO] Final Memory: 61M/873M

[INFO] ------------------------------------------------------------------------
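Going back to the plugin version fix for a moment: if you want to try another fabric8-maven-plugin version before editing the pom.xml, passing the property on the command line is probably the quickest way. This is a sketch that assumes the plugin version in the generated pom.xml is resolved from the fabric8.maven.plugin.version property, in which case a -D user property takes precedence over the value in the pom. The function name build_with_plugin_version is a hypothetical helper.

```shell
# Build with a specific fabric8-maven-plugin version without editing pom.xml.
# Assumption: the plugin <version> in pom.xml is ${fabric8.maven.plugin.version}.
# Usage: build_with_plugin_version 3.1.49
build_with_plugin_version() {
  mvn clean install -Dfabric8.maven.plugin.version="$1"
}
```

Once a version works, it is still worth putting it in the pom.xml so the CD pipeline builds with the same plugin version.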

After the project built successfully I wanted to run it in my Kubernetes cluster.

Thankfully the guys at fabric8 also took this into consideration: by executing the Maven goal fabric8:run, your app will be booted into a Docker container and launched as a Pod in the Kubernetes cluster, just for some local testing.

~/workspace/cdi-jetty-demo$ mvn fabric8:run

[INFO] Scanning for projects...

[INFO]

[INFO] ------------------------------------------------------------------------

[INFO] Building Fabric8 :: Quickstarts :: CDI :: Camel with Jetty as HTTP server 0.0.1-SNAPSHOT

[INFO] ------------------------------------------------------------------------

[INFO]

[INFO] >>> fabric8-maven-plugin:3.1.49:run (default-cli) > install @ cdi-jetty-demo >>>

[INFO]

[INFO] --- maven-resources-plugin:2.6:resources (default-resources) @ cdi-jetty-demo ---

[INFO] Using 'UTF-8' encoding to copy filtered resources.

[INFO] Copying 2 resources

[INFO]

[INFO] --- fabric8-maven-plugin:3.1.49:resource (default) @ cdi-jetty-demo ---

[INFO] F8> Running in Kubernetes mode

[INFO] F8> Running generator java-exec

[INFO] F8> Using resource templates from /home/pim/workspace/cdi-jetty-demo/src/main/fabric8

[WARNING] F8> No .git/config file could be found so cannot annotate kubernetes resources with git commit SHA and branch

[WARNING] F8> No .git/config file could be found so cannot annotate kubernetes resources with git commit SHA and branch

[INFO]

[INFO] --- maven-compiler-plugin:3.3:compile (default-compile) @ cdi-jetty-demo ---

[INFO] Nothing to compile - all classes are up to date

[INFO]

[INFO] --- maven-resources-plugin:2.6:testResources (default-testResources) @ cdi-jetty-demo ---

[INFO] Using 'UTF-8' encoding to copy filtered resources.

[INFO] Copying 1 resource

[INFO]

[INFO] --- maven-compiler-plugin:3.3:testCompile (default-testCompile) @ cdi-jetty-demo ---

[INFO] Nothing to compile - all classes are up to date

[INFO]

[INFO] --- maven-surefire-plugin:2.18.1:test (default-test) @ cdi-jetty-demo ---

[INFO]

[INFO] --- maven-jar-plugin:2.4:jar (default-jar) @ cdi-jetty-demo ---

[INFO] Building jar: /home/pim/workspace/cdi-jetty-demo/target/cdi-jetty-demo.jar

[INFO]

[INFO] >>> fabric8-maven-plugin:3.1.49:build (default) > initialize @ cdi-jetty-demo >>>

[INFO]

[INFO] <<< fabric8-maven-plugin:3.1.49:build (default) < initialize @ cdi-jetty-demo <<<

[INFO]

[INFO] --- fabric8-maven-plugin:3.1.49:build (default) @ cdi-jetty-demo ---

[INFO] F8> Running in Kubernetes mode

[INFO] F8> Running generator java-exec

[INFO] F8> Environment variable from gofabric8 : DOCKER_HOST=tcp://192.168.42.40:2376

[INFO] F8> Environment variable from gofabric8 : DOCKER_CERT_PATH=/home/pim/.minikube/certs

[INFO] Copying files to /home/pim/workspace/cdi-jetty-demo/target/docker/eos/cdi-jetty-demo/snapshot-161228-122841-0217/build/maven

[INFO] Building tar: /home/pim/workspace/cdi-jetty-demo/target/docker/eos/cdi-jetty-demo/snapshot-161228-122841-0217/tmp/docker-build.tar

[INFO] F8> docker-build.tar: Created [eos/cdi-jetty-demo:snapshot-161228-122841-0217] "java-exec" in 5 seconds

[INFO] F8> [eos/cdi-jetty-demo:snapshot-161228-122841-0217] "java-exec": Built image sha256:bccd3

[INFO] F8> [eos/cdi-jetty-demo:snapshot-161228-122841-0217] "java-exec": Tag with latest

[INFO]

[INFO] --- maven-install-plugin:2.4:install (default-install) @ cdi-jetty-demo ---

[INFO] Installing /home/pim/workspace/cdi-jetty-demo/target/cdi-jetty-demo.jar to /home/pim/.m2/repository/nl/rubix/eos/cdi-jetty-demo/0.0.1-SNAPSHOT/cdi-jetty-demo-0.0.1-SNAPSHOT.jar

[INFO] Installing /home/pim/workspace/cdi-jetty-demo/pom.xml to /home/pim/.m2/repository/nl/rubix/eos/cdi-jetty-demo/0.0.1-SNAPSHOT/cdi-jetty-demo-0.0.1-SNAPSHOT.pom

[INFO] Installing /home/pim/workspace/cdi-jetty-demo/target/classes/META-INF/fabric8/openshift.yml to /home/pim/.m2/repository/nl/rubix/eos/cdi-jetty-demo/0.0.1-SNAPSHOT/cdi-jetty-demo-0.0.1-SNAPSHOT-openshift.yml

[INFO] Installing /home/pim/workspace/cdi-jetty-demo/target/classes/META-INF/fabric8/openshift.json to /home/pim/.m2/repository/nl/rubix/eos/cdi-jetty-demo/0.0.1-SNAPSHOT/cdi-jetty-demo-0.0.1-SNAPSHOT-openshift.json

[INFO] Installing /home/pim/workspace/cdi-jetty-demo/target/classes/META-INF/fabric8/kubernetes.yml to /home/pim/.m2/repository/nl/rubix/eos/cdi-jetty-demo/0.0.1-SNAPSHOT/cdi-jetty-demo-0.0.1-SNAPSHOT-kubernetes.yml

[INFO] Installing /home/pim/workspace/cdi-jetty-demo/target/classes/META-INF/fabric8/kubernetes.json to /home/pim/.m2/repository/nl/rubix/eos/cdi-jetty-demo/0.0.1-SNAPSHOT/cdi-jetty-demo-0.0.1-SNAPSHOT-kubernetes.json

[INFO]

[INFO] <<< fabric8-maven-plugin:3.1.49:run (default-cli) < install @ cdi-jetty-demo <<<

[INFO]

[INFO] --- fabric8-maven-plugin:3.1.49:run (default-cli) @ cdi-jetty-demo ---

[INFO] F8> Using Kubernetes at https://192.168.42.40:8443/ in namespace default with manifest /home/pim/workspace/cdi-jetty-demo/target/classes/META-INF/fabric8/kubernetes.yml

[INFO] Using namespace: default

[INFO] Creating a Service from kubernetes.yml namespace default name cdi-jetty-demo

[INFO] Created Service: target/fabric8/applyJson/default/service-cdi-jetty-demo.json

[INFO] Creating a Deployment from kubernetes.yml namespace default name cdi-jetty-demo

[INFO] Created Deployment: target/fabric8/applyJson/default/deployment-cdi-jetty-demo.json

[INFO] hint> Use the command `kubectl get pods -w` to watch your pods start up

[INFO] F8> Watching pods with selector LabelSelector(matchExpressions=[], matchLabels={project=cdi-jetty-demo, provider=fabric8, group=nl.rubix.eos}, additionalProperties={}) waiting for a running pod...

[INFO] New Pod> cdi-jetty-demo-1244207563-s9xn2 status: Pending

[INFO] New Pod> cdi-jetty-demo-1244207563-s9xn2 status: Running

[INFO] New Pod> Tailing log of pod: cdi-jetty-demo-1244207563-s9xn2

[INFO] New Pod> Press Ctrl-C to scale down the app and stop tailing the log

[INFO] New Pod>

[INFO] Pod> exec java -javaagent:/opt/agent-bond/agent-bond.jar=jolokia{{host=0.0.0.0}},jmx_exporter{{9779:/opt/agent-bond/jmx_exporter_config.yml}} -cp .:/app/* org.apache.camel.cdi.Main

[INFO] Pod> I> No access restrictor found, access to any MBean is allowed

[INFO] Pod> Jolokia: Agent started with URL http://172.17.0.6:8778/jolokia/

[INFO] Pod> 2016-12-28 11:28:52.953:INFO:ifasjipjsoejs.Server:jetty-8.y.z-SNAPSHOT

[INFO] Pod> 2016-12-28 11:28:53.001:INFO:ifasjipjsoejs.AbstractConnector:Started SelectChannelConnector@0.0.0.0:9779

[INFO] Pod> 2016-12-28 11:28:53,142 [main ] INFO Version - WELD-000900: 2.3.3 (Final)

[INFO] Pod> 2016-12-28 11:28:53,412 [main ] INFO Bootstrap - WELD-000101: Transactional services not available. Injection of @Inject UserTransaction not available. Transactional observers will be invoked synchronously.

[INFO] Pod> 2016-12-28 11:28:53,605 [main ] INFO Event - WELD-000411: Observer method [BackedAnnotatedMethod] private org.apache.camel.cdi.CdiCamelExtension.processAnnotatedType(@Observes ProcessAnnotatedType<?>) receives events for all annotated types. Consider restricting events using @WithAnnotations or a generic type with bounds.

[INFO] Pod> 2016-12-28 11:28:54,809 [main ] INFO DefaultTypeConverter - Loaded 196 type converters

[INFO] Pod> 2016-12-28 11:28:54,861 [main ] INFO CdiCamelExtension - Camel CDI is starting Camel context [myJettyCamel]

[INFO] Pod> 2016-12-28 11:28:54,863 [main ] INFO DefaultCamelContext - Apache Camel 2.18.1 (CamelContext: myJettyCamel) is starting

[INFO] Pod> 2016-12-28 11:28:54,864 [main ] INFO ManagedManagementStrategy - JMX is enabled

[INFO] Pod> 2016-12-28 11:28:55,030 [main ] INFO DefaultRuntimeEndpointRegistry - Runtime endpoint registry is in extended mode gathering usage statistics of all incoming and outgoing endpoints (cache limit: 1000)

[INFO] Pod> 2016-12-28 11:28:55,081 [main ] INFO DefaultCamelContext - StreamCaching is not in use. If using streams then its recommended to enable stream caching. See more details at http://camel.apache.org/stream-caching.html

[INFO] Pod> 2016-12-28 11:28:55,120 [main ] INFO log - Logging initialized @2850ms

[INFO] Pod> 2016-12-28 11:28:55,170 [main ] INFO Server - jetty-9.2.19.v20160908

[INFO] Pod> 2016-12-28 11:28:55,199 [main ] INFO ContextHandler - Started o.e.j.s.ServletContextHandler@28cb9120{/,null,AVAILABLE}

[INFO] Pod> 2016-12-28 11:28:55,206 [main ] INFO ServerConnector - Started ServerConnector@2da1c45f{HTTP/1.1}{0.0.0.0:8080}

[INFO] Pod> 2016-12-28 11:28:55,206 [main ] INFO Server - Started @2936ms

[INFO] Pod> 2016-12-28 11:28:55,225 [main ] INFO DefaultCamelContext - Route: route1 started and consuming from: jetty:http://0.0.0.0:8080/camel/hello

[INFO] Pod> 2016-12-28 11:28:55,226 [main ] INFO DefaultCamelContext - Total 1 routes, of which 1 are started.

[INFO] Pod> 2016-12-28 11:28:55,228 [main ] INFO DefaultCamelContext - Apache Camel 2.18.1 (CamelContext: myJettyCamel) started in 0.363 seconds

[INFO] Pod> 2016-12-28 11:28:55,238 [main ] INFO Bootstrap - WELD-ENV-002003: Weld SE container STATIC_INSTANCE initialized

^C[INFO] F8> Stopping the app:

[INFO] F8> Scaling Deployment default/cdi-jetty-demo to replicas: 0

[INFO] Pod> 2016-12-28 11:29:52,307 [Thread-12 ] INFO MainSupport$HangupInterceptor - Received hang up - stopping the main instance.

[INFO] Pod> 2016-12-28 11:29:52,313 [Thread-12 ] INFO CamelContextProducer - Camel CDI is stopping Camel context [myJettyCamel]

[INFO] Pod> 2016-12-28 11:29:52,313 [Thread-12 ] INFO DefaultCamelContext - Apache Camel 2.18.1 (CamelContext: myJettyCamel) is shutting down

[INFO] Pod> 2016-12-28 11:29:52,314 [Thread-12 ] INFO DefaultShutdownStrategy - Starting to graceful shutdown 1 routes (timeout 300 seconds)

[INFO] Pod> 2016-12-28 11:29:52,347 [ - ShutdownTask] INFO ServerConnector - Stopped ServerConnector@2da1c45f{HTTP/1.1}{0.0.0.0:8080}

[INFO] Pod> 2016-12-28 11:29:52,350 [ - ShutdownTask] INFO ContextHandler - Stopped o.e.j.s.ServletContextHandler@28cb9120{/,null,UNAVAILABLE}

[INFO] Pod> 2016-12-28 11:29:52,353 [ - ShutdownTask] INFO DefaultShutdownStrategy - Route: route1 shutdown complete, was consuming from: jetty:http://0.0.0.0:8080/camel/hello

There are two things to note:

First, the app is not exposed outside of the Kubernetes cluster by default. I will explain how to do this later on in this blog, after we have completely deployed the app in our cluster.

Second, the fabric8:run goal starts the container and tails the log in the foreground. Whenever you stop it by hitting Ctrl+C, the deployment is automatically scaled down to zero replicas in the cluster. To tweak this behavior check: https://maven.fabric8.io
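While fabric8:run is still tailing the log in its own terminal, you can already reach the route without exposing the service by forwarding a local port to the pod. The sketch below uses the project=cdi-jetty-demo label and the /camel/hello path on port 8080, both taken from the log above. The function names are hypothetical helpers, and the local port 8080 is an arbitrary choice.

```shell
APP_LABEL="project=cdi-jetty-demo"   # label taken from the fabric8:run log

# Print the name of the first pod carrying the app label.
first_pod() {
  kubectl get pods -l "$APP_LABEL" -o jsonpath='{.items[0].metadata.name}'
}

# Forward local port 8080 to port 8080 of the pod.
# Runs in the foreground until you press Ctrl-C.
forward_to_pod() {
  kubectl port-forward "$(first_pod)" 8080:8080
}

# In another terminal: call the Camel route through the forwarded port.
call_hello() {
  curl -s http://localhost:8080/camel/hello
}
```

Run forward_to_pod in a second terminal and call_hello in a third. As soon as you stop fabric8:run, the pod is gone and the port-forward ends with it.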

Deploying our microservice in the fabric8 cluster

After running and testing our microservice locally we are ready to fully deploy it in our cluster. For this we also start with a Maven command: mvn fabric8:import imports the application (templates) into the Kubernetes cluster and pushes the sources of the microservice to the fabric8 Git repository, which is based on Gogs.

~/workspace/cdi-jetty-demo$ mvn fabric8:import

[INFO] Scanning for projects...

[INFO]

[INFO] ------------------------------------------------------------------------

[INFO] Building Fabric8 :: Quickstarts :: CDI :: Camel with Jetty as HTTP server 0.0.1-SNAPSHOT

[INFO] ------------------------------------------------------------------------

[INFO]

[INFO] --- fabric8-maven-plugin:3.1.49:import (default-cli) @ cdi-jetty-demo ---

[INFO] F8> Running 1 endpoints of gogs in namespace default

[INFO] F8> Creating Namespace user-secrets-source-minikube with labels: {provider=fabric8, kind=secrets}

Please enter your username for git repo gogs: gogsadmin

Please enter your password/access token for git repo gogs: RedHat$1

[INFO] F8> Creating Secret user-secrets-source-minikube/default-gogs-git

[INFO] F8> Creating ConfigMap fabric8-git-app-secrets in namespace user-secrets-source-minikube

[INFO] F8> git username: gogsadmin password: ******** email: pim@rubix.nl

[INFO] Trusting all SSL certificates

[INFO] Initialised an empty git configuration repo at /home/pim/workspace/cdi-jetty-demo

[INFO] creating git repository client at: http://192.168.42.40:30616

[INFO] Using remoteUrl: http://192.168.42.40:30616/gogsadmin/cdi-jetty-demo.git and remote name origin

[INFO] About to git commit and push to: http://192.168.42.40:30616/gogsadmin/cdi-jetty-demo.git and remote name origin

[INFO] Using UsernamePasswordCredentialsProvider{user: gogsadmin, password length: 8}

[INFO] Creating a BuildConfig for namespace: default project: cdi-jetty-demo

[INFO] F8> You can view the project dashboard at: http://192.168.42.40:30482/workspaces/default/projects/cdi-jetty-demo

[INFO] F8> To configure a CD Pipeline go to: http://192.168.42.40:30482/workspaces/default/projects/cdi-jetty-demo/forge/command/devops-edit

[INFO] ------------------------------------------------------------------------

[INFO] BUILD SUCCESS

[INFO] ------------------------------------------------------------------------

[INFO] Total time: 19.643 s

[INFO] Finished at: 2016-12-28T12:30:27+01:00

[INFO] Final Memory: 26M/494M

[INFO] ------------------------------------------------------------------------

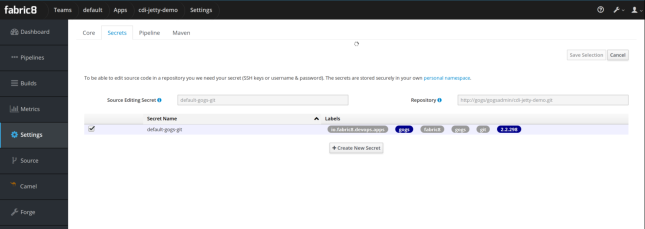

After the successful execution of the import goal we need to finish the setup in the fabric8 console.

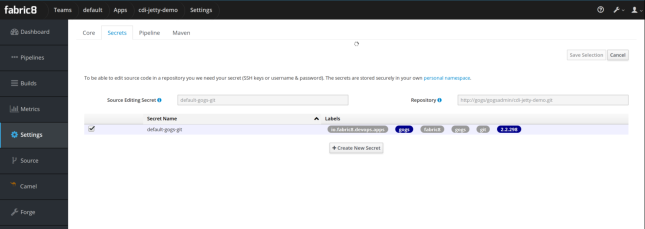

First we need to set up a Kubernetes secret to authenticate to the Gogs Git repository.

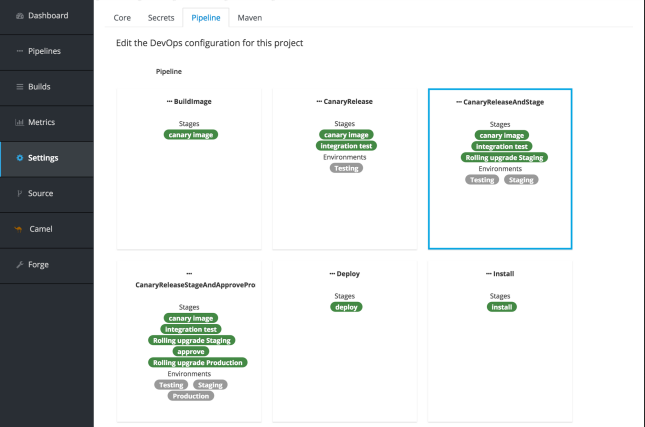

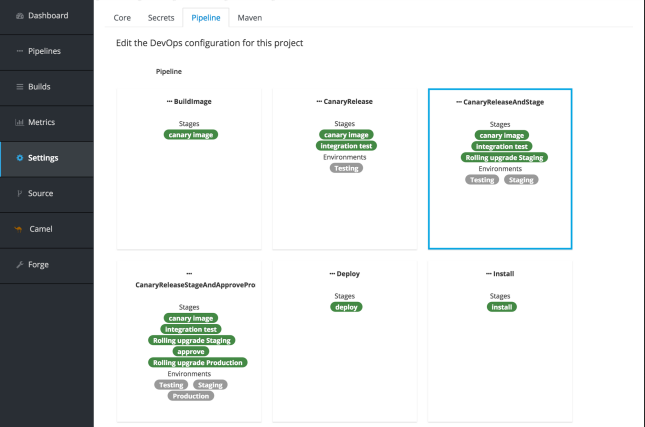

Next we can select a CD pipeline.

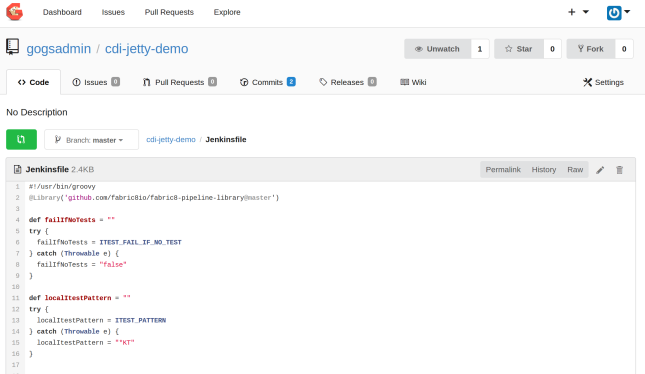

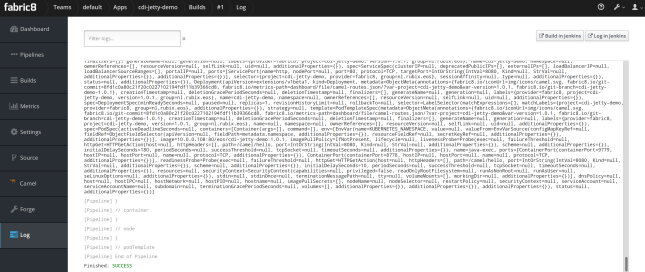

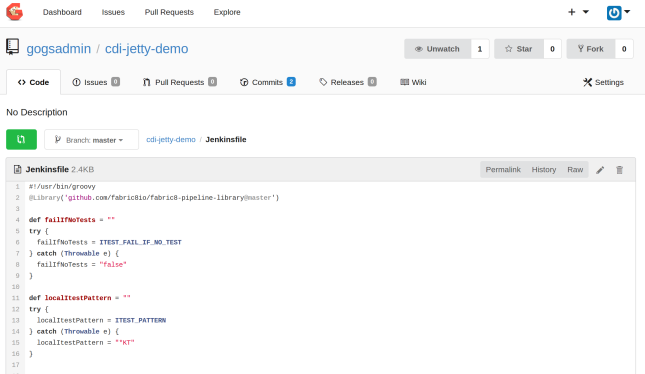

After we have selected a CD pipeline suited to the lifecycle of our microservice, the Jenkinsfile will be committed to the Git repository of our microservice. After that Jenkins will be configured and the Jenkins CD pipeline will start its initial job.

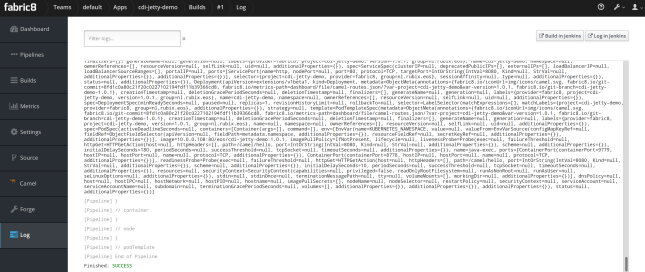

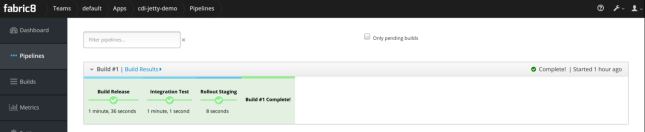

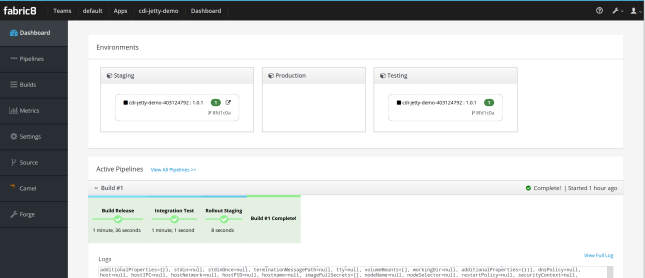

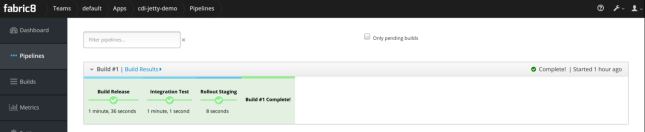

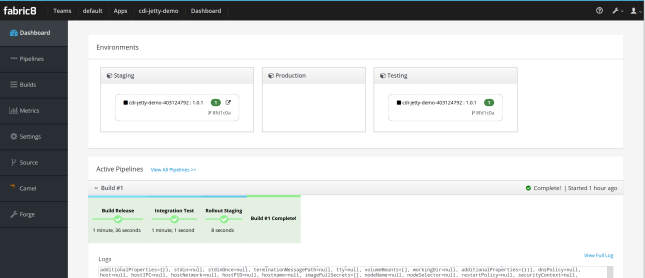

If everything goes fine the entire Jenkins pipeline will finish successfully.

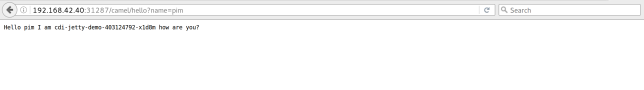

Now our microservice is running in the test and staging environments, which are created if they did not already exist. However, as mentioned above, the microservice is not yet exposed outside of the Kubernetes cluster. This is because the Kubernetes template used has the following setting in the service configuration:

type: ClusterIP

Change this to the following and your app will be exposed outside of the Kubernetes cluster, ready to be consumed by external parties:

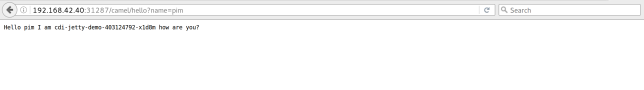

type: NodePort

This means the microservice can be called via a browser:
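If you just want to try this on a service that is already running, without touching the template, something like the sketch below should work on minikube. It patches the live service to NodePort and asks minikube for a URL that is reachable from the host. The NAMESPACE default is an assumption, so point it at whatever namespace your test or staging environment uses. The function names are hypothetical helpers. Keep in mind that a change made this way will most likely be overwritten the next time the pipeline deploys, so the lasting fix still belongs in the template.

```shell
SERVICE="cdi-jetty-demo"
# Assumption: override NAMESPACE with your test or staging namespace.
NAMESPACE="${NAMESPACE:-default}"

# Switch the running service from ClusterIP to NodePort.
expose_service() {
  kubectl --namespace "$NAMESPACE" patch service "$SERVICE" \
    -p '{"spec":{"type":"NodePort"}}'
}

# Print a URL for the service that is reachable from the minikube host.
service_url() {
  minikube service "$SERVICE" --namespace "$NAMESPACE" --url
}
```

After expose_service, running curl "$(service_url)/camel/hello" from the host should hit the same /camel/hello route that showed up in the fabric8:run log.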

If everything went fine the fabric8 dashboard for the app will look something like this:

As I mentioned above, selecting the CD pipeline adds the Jenkinsfile to the Git repository. You can see it (and of course edit it if you wish) in the Gogs Git repo:
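Since fabric8:import configured the Gogs repository as the origin remote of the local project, you can also pull the Jenkinsfile into your workspace and read it there. This is a small sketch to be run from the project directory. The branch name master is an assumption, and show_jenkinsfile is a hypothetical helper. Git will ask for your Gogs credentials.

```shell
# Pull the latest commits from Gogs and print the Jenkinsfile.
# Assumption: the pipeline committed the Jenkinsfile to the master branch.
# Usage: show_jenkinsfile [branch]
show_jenkinsfile() {
  git pull origin "${1:-master}" && cat Jenkinsfile
}
```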

Some final thoughts

Being able to quickly deploy functionality across different environments and not have to worry about runtime config, app servers, and manually hacking CD pipelines is definitely a major advantage for every app developer. For this reason the fabric8 framework in combination with Kubernetes really has some good potential. Using fabric8 locally with minikube did cause some stability issues, which I have not fully identified yet, but I’m sure this will improve with every new version coming up.