In ActiveMQ it is possible to define logical groups of message brokers in order to obtain more resiliency or throughput.

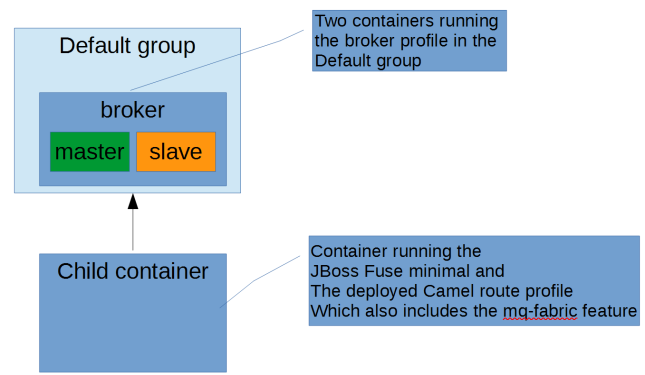

The setup configuration described here can be outlined as follows:

Broker groups and a network of brokers can be created in several ways in JBoss Fuse Fabric. Here we use the Fabric CLI.

The following steps are necessary to create the configuration above:

- Creating a Fabric (if we don’t already have one)
- Create child containers
- Create the MQ broker profiles and assign them to the child containers
- Connect a couple of clients for testing

1. Creating a Fabric (optional)

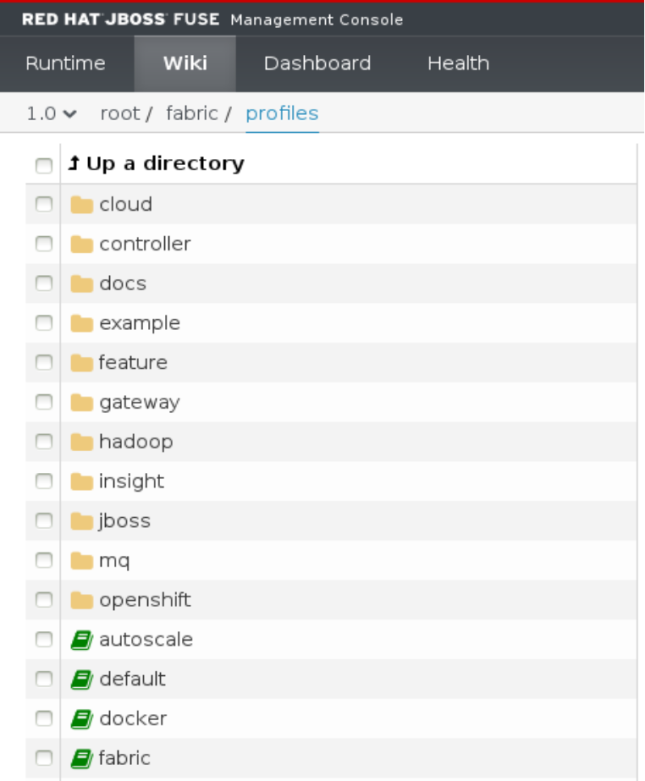

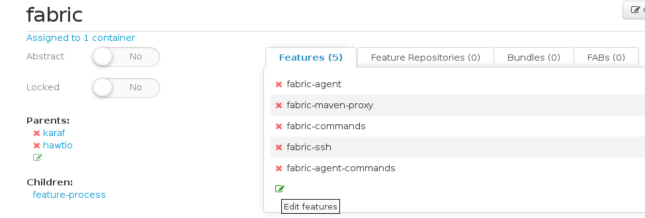

Assuming we start with a clean Fuse installation, the first step is to create a Fabric. This step can be skipped if a Fabric is already available.

In the Fuse CLI execute the following command:

JBossFuse:karaf@root> fabric:create -p fabric --wait-for-provisioning

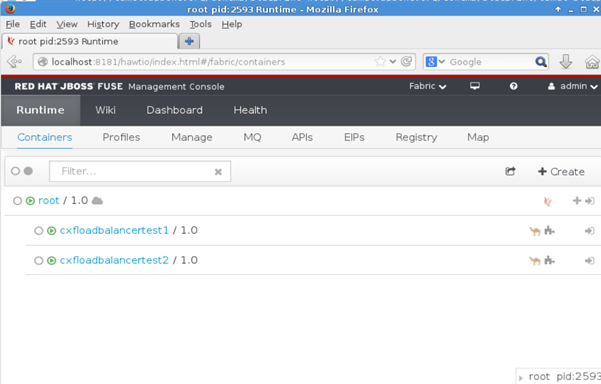

2. Create child containers

Next we create two sets of child containers to host our brokers. Note that these child containers are empty at this point and do not yet contain AMQ brokers; we provision them with AMQ brokers in step 3.

First create the child containers for siteA. Because the count is 2, the containers are named site-a1 and site-a2:

JBossFuse:karaf@root> fabric:container-create-child root site-a 2

Next create the child containers for siteB (site-b1 and site-b2):

JBossFuse:karaf@root> fabric:container-create-child root site-b 2

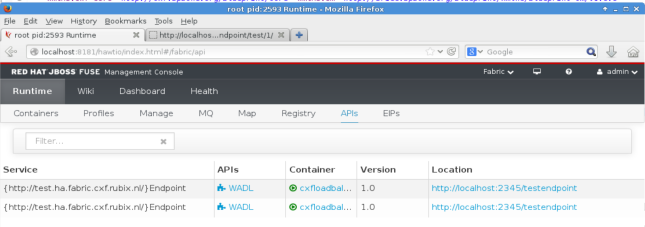

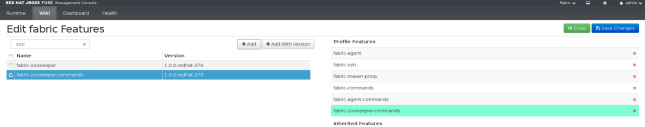

3. Create the MQ broker profiles and assign them to the child containers

In this step we are going to create the broker profiles in fabric and assign them to the containers we created in the previous step.

JBossFuse:karaf@root> fabric:mq-create --group site-a --networks site-b --networks-username admin --networks-password admin --assign-container site-a1,site-a2 site-a-profile

JBossFuse:karaf@root> fabric:mq-create --group site-b --networks site-a --networks-username admin --networks-password admin --assign-container site-b1,site-b2 site-b-profile

The fabric:mq-create command creates a broker profile in Fuse Fabric. The --group flag assigns the brokers in the profile to a group. The --networks flag names the broker group to connect to, which creates the network connection needed for a network of brokers; --networks-username and --networks-password supply the credentials for that connection. The --assign-container flag assigns the newly created broker profile to one or more containers.
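The two commands above mirror each other: each site's group is networked to the other site's group. As a rough sketch of that symmetry, the shell helper below only prints the fabric:mq-create command for a site so it can be pasted into the Fabric CLI. The function name mq_create_cmd is made up for illustration, and it assumes two containers per site with the numeric suffixes and admin credentials used above.

```shell
#!/bin/sh
# Hypothetical helper: prints (does not run) the fabric:mq-create command
# for one site, networking its broker group to the peer site's group.
# Assumes two containers per site named <site>1 and <site>2.
mq_create_cmd() {
  site="$1"
  peer="$2"
  printf 'fabric:mq-create --group %s --networks %s --networks-username admin --networks-password admin --assign-container %s1,%s2 %s-profile\n' \
    "$site" "$peer" "$site" "$site" "$site"
}

# Each site is networked to the other one.
mq_create_cmd site-a site-b
mq_create_cmd site-b site-a
```

Printing rather than executing keeps the sketch independent of how the Fabric CLI is reached in a given installation.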

4. Connect a couple of clients for testing

A sample project containing two clients, one producer and one consumer, is available on GitHub.

Clone the repository:

$ git clone https://github.com/pimg/mq-fabric-client.git

Build the project:

$ mvn clean install

Start the message consumer (in the java-consumer directory):

$ mvn exec:java

Start the message producer (in the java-producer directory):

$ mvn exec:java

Observe the console logging of the producer:

14:48:58 INFO Using local ZKClient
14:48:58 INFO Starting
14:48:58 INFO Client environment:zookeeper.version=3.4.6-1569965, built on 02/20/2014 09:09 GMT
14:48:58 INFO Client environment:host.name=pim-XPS-15-9530
14:48:58 INFO Client environment:java.version=1.8.0_77
14:48:58 INFO Client environment:java.vendor=Oracle Corporation
14:48:58 INFO Client environment:java.home=/home/pim/apps/jdk1.8.0_77/jre
14:48:58 INFO Client environment:java.class.path=/home/pim/apps/apache-maven-3.3.9/boot/plexus-classworlds-2.5.2.jar
14:48:58 INFO Client environment:java.library.path=/usr/java/packages/lib/amd64:/usr/lib64:/lib64:/lib:/usr/lib
14:48:58 INFO Client environment:java.io.tmpdir=/tmp
14:48:58 INFO Client environment:java.compiler=<NA>
14:48:58 INFO Client environment:os.name=Linux
14:48:58 INFO Client environment:os.arch=amd64
14:48:58 INFO Client environment:os.version=4.2.0-34-generic
14:48:58 INFO Client environment:user.name=pim
14:48:58 INFO Client environment:user.home=/home/pim
14:48:58 INFO Client environment:user.dir=/home/pim/workspace/mq-fabric/java-producer
14:48:58 INFO Initiating client connection, connectString=localhost:2181 sessionTimeout=60000 watcher=org.apache.curator.ConnectionState@47e011e3
14:48:58 INFO Opening socket connection to server localhost/127.0.0.1:2181
14:48:58 INFO Socket connection established to localhost/127.0.0.1:2181, initiating session
14:48:58 INFO Session establishment complete on server localhost/127.0.0.1:2181, sessionid = 0x154620540e80009, negotiated timeout = 40000
14:48:58 INFO State change: CONNECTED
14:48:59 INFO Adding new broker connection URL: tcp://10.0.3.1:38417
14:49:00 INFO Successfully connected to tcp://10.0.3.1:38417
14:49:00 INFO Sending to destination: queue://fabric.simple this text: 1. message sent
14:49:00 INFO Sending to destination: queue://fabric.simple this text: 2. message sent
14:49:01 INFO Sending to destination: queue://fabric.simple this text: 3. message sent
14:49:01 INFO Sending to destination: queue://fabric.simple this text: 4. message sent
14:49:02 INFO Sending to destination: queue://fabric.simple this text: 5. message sent
14:49:02 INFO Sending to destination: queue://fabric.simple this text: 6. message sent
14:49:03 INFO Sending to destination: queue://fabric.simple this text: 7. message sent
14:49:03 INFO Sending to destination: queue://fabric.simple this text: 8. message sent
14:49:04 INFO Sending to destination: queue://fabric.simple this text: 9. message sent

Observe the console logging of the consumer:

14:48:20 INFO Using local ZKClient
14:48:20 INFO Starting
14:48:20 INFO Client environment:zookeeper.version=3.4.6-1569965, built on 02/20/2014 09:09 GMT
14:48:20 INFO Client environment:host.name=pim-XPS-15-9530
14:48:20 INFO Client environment:java.version=1.8.0_77
14:48:20 INFO Client environment:java.vendor=Oracle Corporation
14:48:20 INFO Client environment:java.home=/home/pim/apps/jdk1.8.0_77/jre
14:48:20 INFO Client environment:java.class.path=/home/pim/apps/apache-maven-3.3.9/boot/plexus-classworlds-2.5.2.jar
14:48:20 INFO Client environment:java.library.path=/usr/java/packages/lib/amd64:/usr/lib64:/lib64:/lib:/usr/lib
14:48:20 INFO Client environment:java.io.tmpdir=/tmp
14:48:20 INFO Client environment:java.compiler=<NA>
14:48:20 INFO Client environment:os.name=Linux
14:48:20 INFO Client environment:os.arch=amd64
14:48:20 INFO Client environment:os.version=4.2.0-34-generic
14:48:20 INFO Client environment:user.name=pim
14:48:20 INFO Client environment:user.home=/home/pim
14:48:20 INFO Client environment:user.dir=/home/pim/workspace/mq-fabric/java-consumer
14:48:20 INFO Initiating client connection, connectString=localhost:2181 sessionTimeout=60000 watcher=org.apache.curator.ConnectionState@3d732a14
14:48:20 INFO Opening socket connection to server localhost/127.0.0.1:2181
14:48:20 INFO Socket connection established to localhost/127.0.0.1:2181, initiating session
14:48:20 INFO Session establishment complete on server localhost/127.0.0.1:2181, sessionid = 0x154620540e80008, negotiated timeout = 40000
14:48:20 INFO State change: CONNECTED
14:48:21 INFO Adding new broker connection URL: tcp://10.0.3.1:38417
14:48:21 INFO Successfully connected to tcp://10.0.3.1:38417
14:48:21 INFO Start consuming messages from queue://fabric.simple with 120000ms timeout
14:49:00 INFO Got 1. message: 1. message sent
14:49:00 INFO Got 2. message: 2. message sent
14:49:01 INFO Got 3. message: 3. message sent
14:49:01 INFO Got 4. message: 4. message sent
14:49:02 INFO Got 5. message: 5. message sent
14:49:02 INFO Got 6. message: 6. message sent
14:49:03 INFO Got 7. message: 7. message sent
14:49:03 INFO Got 8. message: 8. message sent
14:49:04 INFO Got 9. message: 9. message sent
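
To check the logs mechanically rather than by eye, one option is to count the sent and received entries and compare them. The sketch below assumes the two console outputs were saved to files; the names producer.log and consumer.log are placeholders, and the sample lines written into them are just a few entries copied from the output above so the script runs on its own.

```shell
#!/bin/sh
# Assumption: the console output of each client was captured to a file.
# The file names are placeholders; a few entries from the logs above are
# written here only to keep the sketch self-contained.
workdir="$(mktemp -d)"
cat > "$workdir/producer.log" <<'EOF'
14:49:00 INFO Successfully connected to tcp://10.0.3.1:38417
14:49:00 INFO Sending to destination: queue://fabric.simple this text: 1. message sent
14:49:00 INFO Sending to destination: queue://fabric.simple this text: 2. message sent
14:49:01 INFO Sending to destination: queue://fabric.simple this text: 3. message sent
EOF
cat > "$workdir/consumer.log" <<'EOF'
14:48:21 INFO Start consuming messages from queue://fabric.simple with 120000ms timeout
14:49:00 INFO Got 1. message: 1. message sent
14:49:00 INFO Got 2. message: 2. message sent
14:49:01 INFO Got 3. message: 3. message sent
EOF

# Count the messages sent by the producer and received by the consumer.
sent="$(grep -c 'Sending to destination' "$workdir/producer.log")"
received="$(grep -c 'INFO Got [0-9]*\. message' "$workdir/consumer.log")"

if [ "$sent" -eq "$received" ]; then
  echo "OK: $received of $sent messages received"
else
  echo "MISMATCH: sent=$sent received=$received"
fi
rm -rf "$workdir"
```

With real log files, drop the two here-documents and point the grep commands at the captured output instead.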